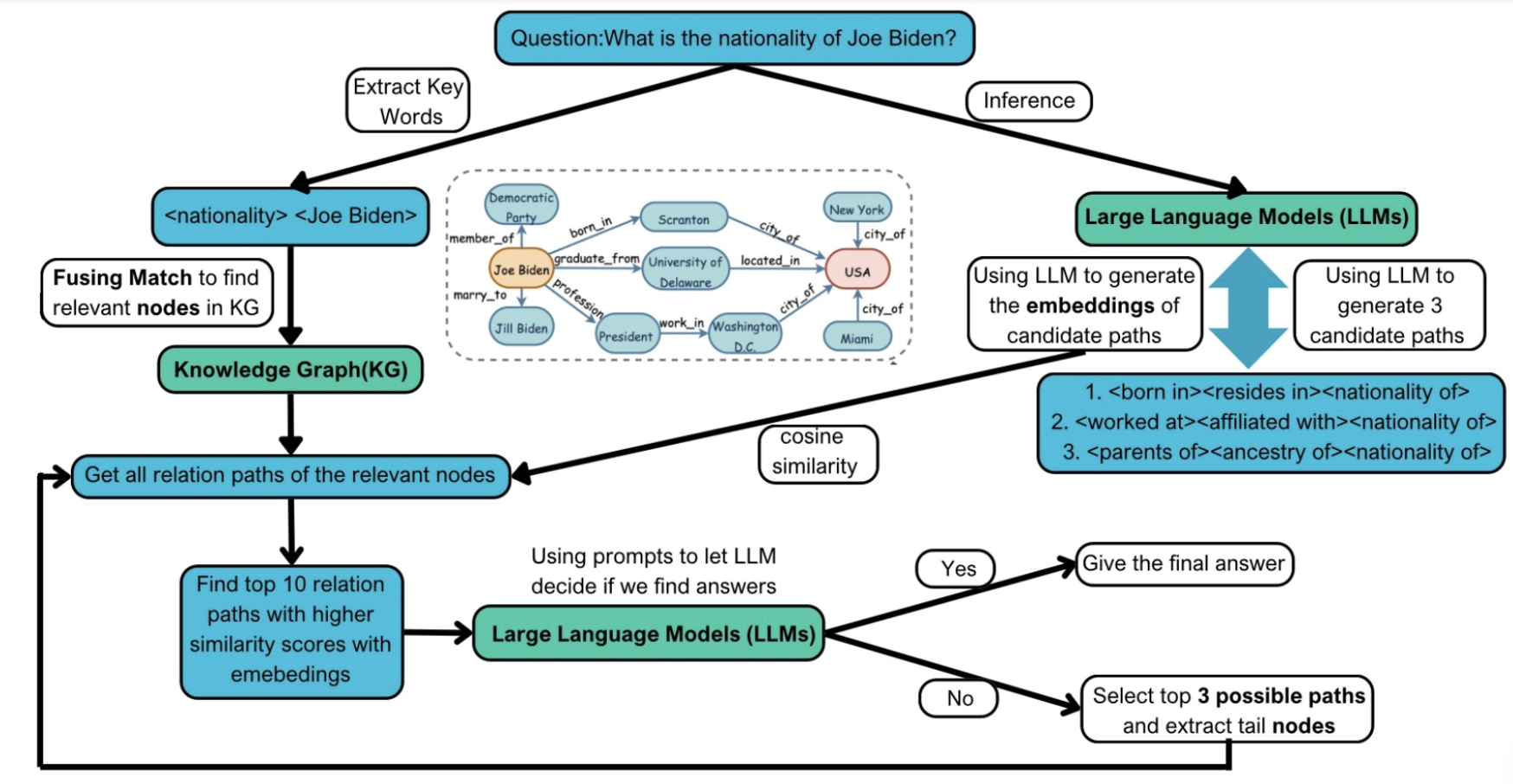

Improve LLM Faithfulness with KG

I am currently a master's student in EECS at UC Berkeley. I recently completed my undergraduate studies at the University of Michigan with a major in Data Science, along with a dual degree in Electrical and Computer Engineering at Shanghai Jiao Tong University through a joint program. I previously worked in the Statistics & Optimization for Trustworthy AI (SOTA) lab on LLMs and reinforcement learning. I also work in the Foreseer Group on LLMs for scientific discovery, focusing on innovative AI methods and their applications in data analysis and knowledge generation, and with Prof. Z. Morley Mao on AI security.

MapExplorer: New Content Generation from Low-Dimensional Visualizations (KDD 2025, accepted)

Xingjian Zhang, Ziyang Xiong, Shixuan Liu, Yutong Xie, Tolga Ergen, Dongsub Shim, Hua Xu, Honglak Lee, Qiaozhu Mei

MASSW: A New Dataset and Benchmark Tasks for AI-Assisted Scientific Workflows (NAACL 2025, accepted)

Xingjian Zhang, Yutong Xie, Ziyang Xiong, et al.

Safeguard is a Double-edged Sword: Denial-of-service Attack on Large Language Models (ACM CCS-LAMPS, accepted)

Qingzhao Zhang, Ziyang Xiong, Z. Morley Mao

Making Small Language Models Efficient Reasoners: Intervention, Supervision, Reinforcement (ICML 2025 Workshop LCFM, accepted)

Xuechen Zhang, Zijian Huang, Chenshun Ni, Ziyang Xiong, Jiasi Chen, Samet Oymak
